Rank¶

Ranking of attributes in classification or regression data sets.

Signals¶

Inputs:

Data

An input data set.

Scorer (multiple)

Models that implement the feature scoring interface, such as linear / logistic regression, random forest, stochastic gradient descent, etc.

Outputs:

Reduced Data

A data set with selected attributes.

Description¶

The Rank widget considers class-labeled data sets (classification or regression) and scores the attributes according to their correlation with the class.

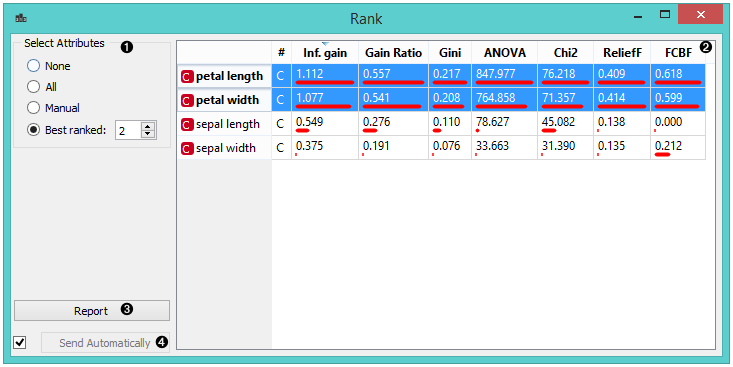

- Select attributes from the data table.

- Data table with attributes (rows) and their scores by different scoring methods (columns).

- Produce a report.

- If ‘Send Automatically’ is ticked, the widget automatically communicates changes to other widgets.

Scoring methods¶

- Information Gain: the expected amount of information (reduction of entropy)

- Gain Ratio: a ratio of the information gain and the attribute’s intrinsic information, which reduces the bias towards multivalued features that occurs in information gain

- Gini: the inequality among values of a frequency distribution

- ANOVA: the difference between average values of the feature in different classes

- Chi2: dependence between the feature and the class as measured by the chi-square statistic

- ReliefF: the ability of an attribute to distinguish between classes on similar data instances

- FCBF (Fast Correlation Based Filter): entropy-based measure, which also identifies redundancy due to pairwise correlations between features

Additionally, you can connect certain learners that enable scoring the features according to how important they are in models that the learners build (e.g. Linear / Logistic Regression, Random Forest, SGD, …).
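As a rough illustration of the first two scores in the list above, the following sketch computes information gain and gain ratio for discrete features in plain Python. It is not the widget's implementation: the function names and the toy data (`outlook`, `windy`, `play`) are made up for this example, and continuous features, which would first need to be discretized, are not handled.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(feature, target):
    """Class entropy minus the expected class entropy after splitting on the feature."""
    n = len(target)
    groups = {}
    for value, label in zip(feature, target):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(target) - conditional


def gain_ratio(feature, target):
    """Information gain divided by the feature's intrinsic information."""
    intrinsic = entropy(feature)
    return information_gain(feature, target) / intrinsic if intrinsic > 0 else 0.0


# Hypothetical toy data: two discrete features and a binary class.
outlook = ["sunny", "sunny", "rain", "rain", "overcast", "overcast"]
windy = ["yes", "no", "yes", "no", "yes", "no"]
play = ["no", "no", "no", "yes", "yes", "yes"]

scores = {
    "outlook": information_gain(outlook, play),
    "windy": information_gain(windy, play),
}

# Rank the features from the most to the least informative.
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking, scores)
```

On this toy data, `outlook` has three values and `windy` has two, so dividing by the intrinsic information penalizes `outlook` more. This is the correction for multivalued features that the gain ratio is meant to provide.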

Example: Attribute Ranking and Selection¶

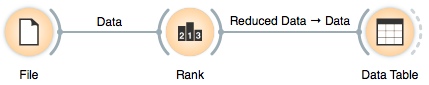

Here, we have used the Rank widget immediately after the File widget to reduce the set of data attributes and include only the most informative ones.

Notice how the widget outputs a data set that includes only the best-scored attributes:

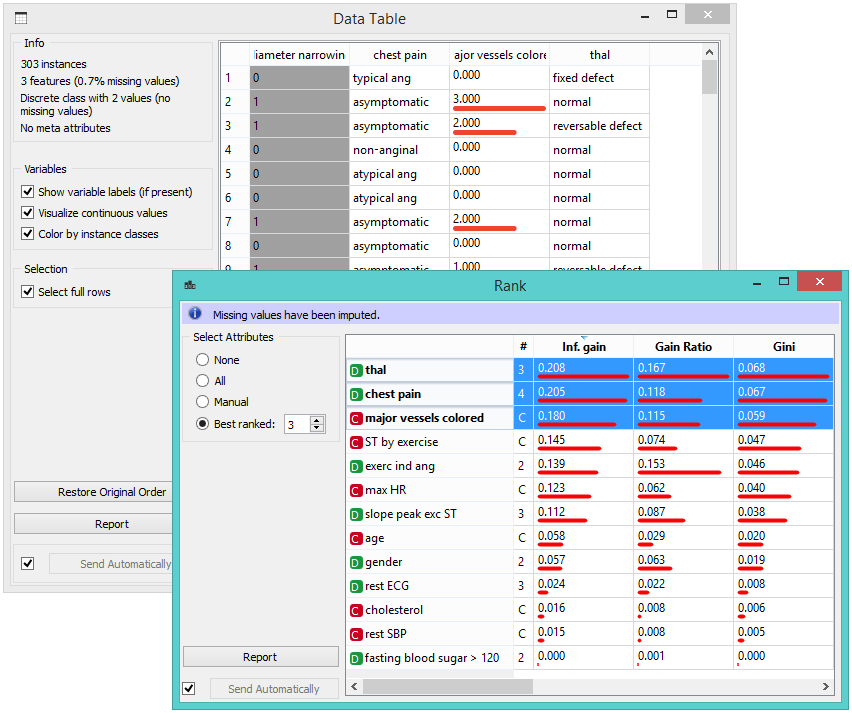

Example: Feature Subset Selection for Machine Learning¶

What follows is a slightly more complicated example. In this workflow, we first split the data into a training set and a test set. In the upper branch, the training data passes through the Rank widget to select the most informative attributes, while in the lower branch there is no feature selection. Both the feature-selected and the original data sets are passed to their own Test & Score widgets, which develop a Naive Bayes classifier and score it on a test set.

For data sets with many features, feature selection, as shown above, often improves the predictive accuracy of a naive Bayesian classifier.
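The two branches of this workflow can be sketched in plain Python, assuming binary features, information gain as the scoring method, and a small categorical naive Bayes with Laplace smoothing. All names and data here are hypothetical and this is not how the widgets are implemented. The toy data is deliberately contrived: feature 0 determines the class, while the other three features agree with the class in the training set only by chance. The resulting accuracies illustrate the mechanism and are not evidence about real data.

```python
from collections import Counter, defaultdict
from math import log, log2


def entropy(labels):
    n = len(labels)
    return -sum(c / n * log2(c / n) for c in Counter(labels).values())


def info_gain(column, labels):
    groups = defaultdict(list)
    for value, label in zip(column, labels):
        groups[value].append(label)
    n = len(labels)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())


def top_k_features(rows, labels, k):
    """Indices of the k features with the highest information gain."""
    n_features = len(rows[0])
    gains = [info_gain([r[j] for r in rows], labels) for j in range(n_features)]
    return sorted(range(n_features), key=lambda j: gains[j], reverse=True)[:k]


def project(rows, keep):
    """Keep only the selected feature columns."""
    return [tuple(row[j] for j in keep) for row in rows]


def fit_naive_bayes(rows, labels):
    """Count class frequencies and per-class feature value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> value counts
    for row, label in zip(rows, labels):
        for j, value in enumerate(row):
            value_counts[j, label][value] += 1
    return class_counts, value_counts


def predict(model, row):
    class_counts, value_counts = model
    total = sum(class_counts.values())

    def log_posterior(label):
        score = log(class_counts[label] / total)
        for j, value in enumerate(row):
            # Laplace smoothing; the "+ 2" assumes two possible values per feature.
            score += log((value_counts[j, label][value] + 1) / (class_counts[label] + 2))
        return score

    return max(class_counts, key=log_posterior)


def accuracy(model, rows, labels):
    hits = sum(predict(model, row) == label for row, label in zip(rows, labels))
    return hits / len(labels)


# Hypothetical data: (signal, noise, noise, noise) per row.
train_X = [(1, 1, 1, 1), (1, 1, 1, 0), (1, 1, 0, 1), (1, 0, 1, 1),
           (0, 0, 0, 0), (0, 0, 0, 1), (0, 0, 1, 0), (0, 1, 0, 0)]
train_y = ["a"] * 4 + ["b"] * 4
test_X = [(1, 0, 0, 0), (0, 1, 1, 1), (1, 1, 1, 0), (0, 0, 1, 0)]
test_y = ["a", "b", "a", "b"]

# Upper branch: rank on the training data only, then apply the same selection to the test data.
selected = top_k_features(train_X, train_y, k=1)
model_selected = fit_naive_bayes(project(train_X, selected), train_y)
acc_selected = accuracy(model_selected, project(test_X, selected), test_y)

# Lower branch: no feature selection.
acc_all = accuracy(fit_naive_bayes(train_X, train_y), test_X, test_y)

print(selected, acc_selected, acc_all)
```

The attributes are ranked on the training set alone and the same selection is then applied to the test set. Scoring attributes on the full data before splitting would let information from the test set leak into the selection.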