Nearest Neighbors

Predicts according to the nearest training instances.

Signals

Inputs:

Data

A data set

Preprocessor

Preprocessing method(s)

Outputs:

Learner

A learning algorithm with supplied parameters

Predictor

A trained regressor. The Predictor signal is sent only if input Data is present.

Description

The Nearest Neighbors widget uses the kNN algorithm, which searches for the k closest training examples in feature space and uses their average as the prediction.
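A minimal sketch of this idea in plain Python (standard library only) is shown below. The function name `knn_regress` is hypothetical and not part of Orange; the sketch assumes Euclidean distance and an unweighted mean, which corresponds to only one of the widget's possible settings.

```python
import math

def knn_regress(train_X, train_y, query, k=5):
    """Predict the target of `query` as the mean target of its k nearest
    training instances (Euclidean distance, uniform weights)."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training instances")
    # Pair each training instance's distance to the query with its target value.
    dists = [(math.dist(x, query), y) for x, y in zip(train_X, train_y)]
    # Sort by distance and keep only the k closest instances.
    nearest = sorted(dists, key=lambda pair: pair[0])[:k]
    # The plain average of their targets is the prediction.
    return sum(y for _, y in nearest) / k

train_X = [[1.0], [2.0], [3.0], [10.0]]
train_y = [1.0, 2.0, 3.0, 10.0]
print(knn_regress(train_X, train_y, [2.1], k=3))  # averages targets 2.0, 3.0, 1.0
```

Here the outlier at 10.0 is not among the three nearest neighbors of 2.1, so it does not affect the prediction.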

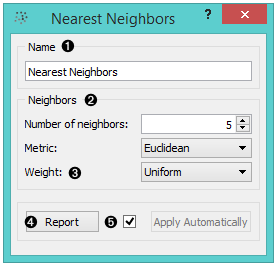

- Learner/predictor name

- Set the number of nearest neighbors and the distance parameter (metric) as regression criteria. The metric can be:

  - Euclidean ("straight line" distance between two points)

  - Manhattan (sum of absolute differences of all attributes)

  - Maximal (greatest of the absolute differences between attributes)

  - Mahalanobis (distance between a point and a distribution).

- You can assign weights to the contributions of the neighbors. The available weights are:

  - Uniform: all points in each neighborhood are weighted equally.

  - Distance: closer neighbors of a query point have a greater influence than neighbors further away.

- Produce a report.

- Press Apply to commit changes.
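For reference, the four metrics listed above can be written out as follows. These are the standard textbook definitions, and the function names are hypothetical rather than Orange's API. How the widget estimates the covariance used by Mahalanobis is not stated here, so this sketch simply assumes the caller supplies the inverse covariance matrix `inv_cov`.

```python
import math

def euclidean(a, b):
    # Straight-line distance: square root of the sum of squared differences.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    # Sum of absolute differences over all attributes.
    return sum(abs(x - y) for x, y in zip(a, b))

def maximal(a, b):
    # Greatest absolute difference over all attributes (Chebyshev distance).
    return max(abs(x - y) for x, y in zip(a, b))

def mahalanobis(a, b, inv_cov):
    # sqrt(d^T S^-1 d) with d = a - b; inv_cov is S^-1, the inverse covariance
    # matrix of the data distribution (assumed to be supplied by the caller).
    d = [x - y for x, y in zip(a, b)]
    s_inv_d = [sum(row[j] * d[j] for j in range(len(d))) for row in inv_cov]
    return math.sqrt(sum(di * si for di, si in zip(d, s_inv_d)))

a, b = (0.0, 0.0), (3.0, 4.0)
print(euclidean(a, b), manhattan(a, b), maximal(a, b))
print(mahalanobis(a, b, [[1.0, 0.0], [0.0, 1.0]]))
```

With the identity matrix as `inv_cov`, the Mahalanobis distance reduces to the Euclidean distance; a real covariance matrix rescales and decorrelates the attributes before measuring distance.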
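The two weighting options can be illustrated with a small helper that combines the (distance, target) pairs of the k nearest neighbors. The helper name is hypothetical, and the exact formula behind the widget's Distance option is not stated in this document; the sketch assumes inverse-distance weights (1/d), a common choice and the one scikit-learn uses for `weights="distance"`.

```python
def weighted_prediction(neighbors, weights="uniform"):
    """Combine (distance, target) pairs of the k nearest neighbors.

    "uniform":  plain mean of the targets.
    "distance": each target weighted by 1 / distance (assumed formula),
                so closer neighbors have a greater influence.
    """
    if weights == "uniform":
        return sum(y for _, y in neighbors) / len(neighbors)
    if weights == "distance":
        # An exact match would get infinite weight; average only those instead.
        exact = [y for d, y in neighbors if d == 0]
        if exact:
            return sum(exact) / len(exact)
        ws = [1.0 / d for d, _ in neighbors]
        return sum(w * y for w, (_, y) in zip(ws, neighbors)) / sum(ws)
    raise ValueError(f"unknown weighting: {weights!r}")

neighbors = [(1.0, 10.0), (2.0, 20.0)]
print(weighted_prediction(neighbors, "uniform"))
print(weighted_prediction(neighbors, "distance"))
```

With uniform weights both neighbors count equally; with distance weights the neighbor at distance 1.0 counts twice as much as the one at distance 2.0, pulling the prediction toward 10.0.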

Example

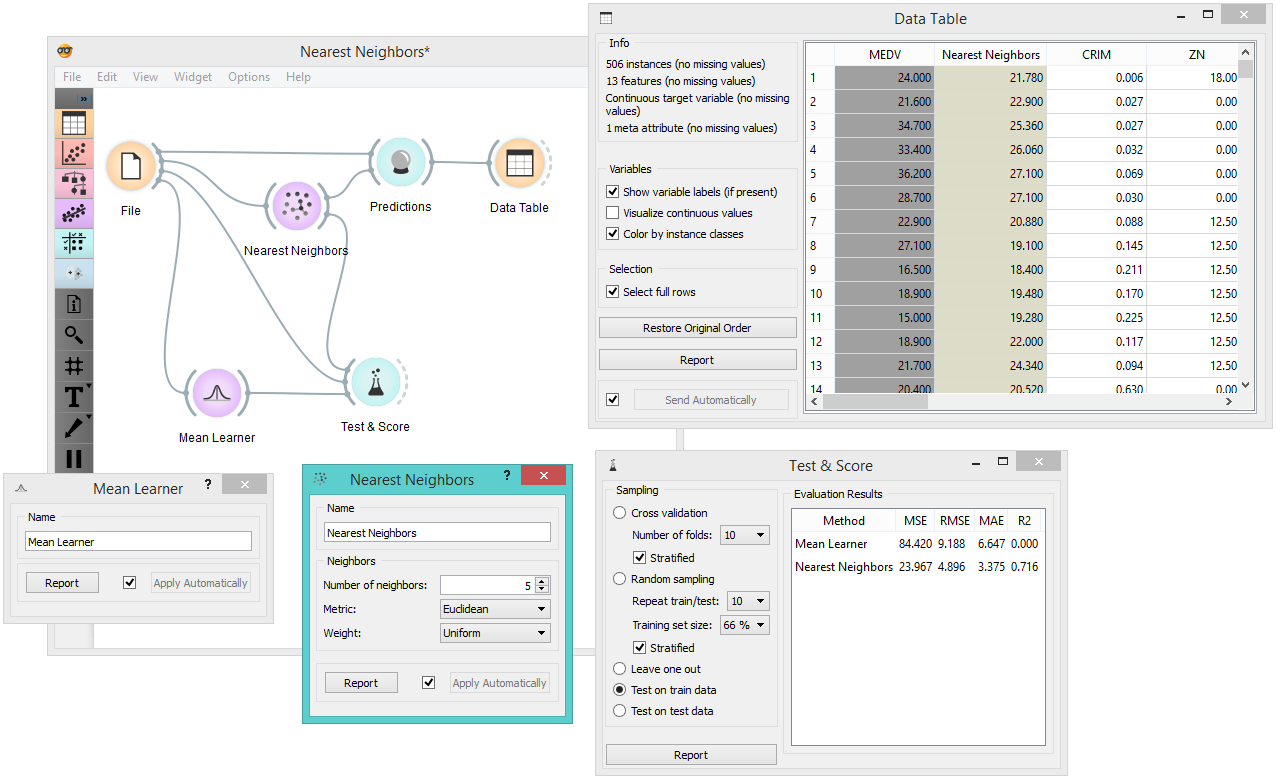

The workflow above shows how to use both the Predictor and the Learner outputs. For the purpose of this example, we used the Housing data set. For the Predictor, we input the prediction model into the Predictions widget and view the results in the Data Table. For the Learner, we can compare different learners in the Test&Score widget.